Breaking the Attention Trap in Code LLMs

Note

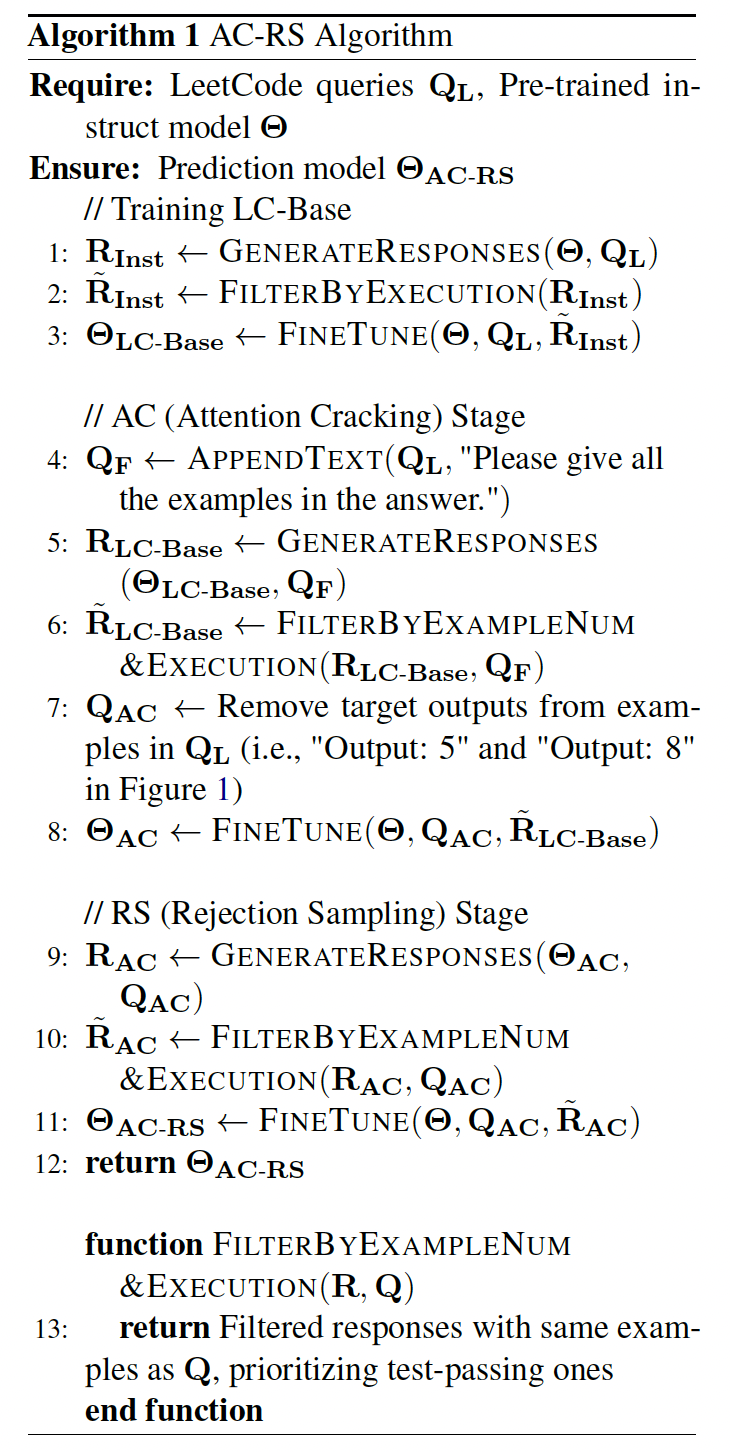

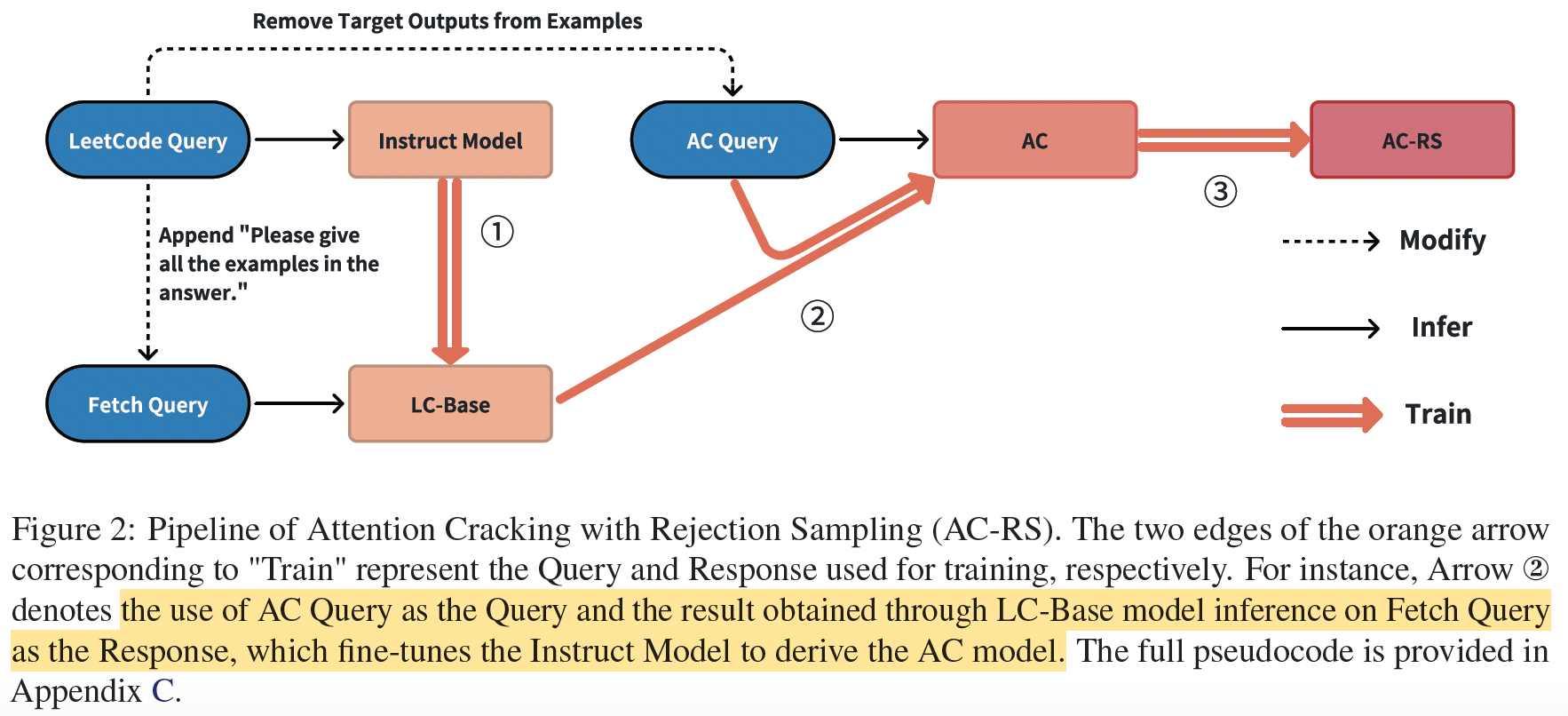

This study identifies the Attention Trap phenomenon in training data as a key constraint on model performance. To address it, we propose Attention Cracking with Rejection Sampling (AC-RS), a method with two stages:

Attention Cracking (AC) modifies training data to eliminate attention traps.

Rejection Sampling (RS) uses the model's own generated outputs for a second round of training, preventing the performance degradation caused by the manual data modifications.

Method

To ensure a fair comparison and to prepare for the subsequent training-data generation, we first train the LC-Base model on LeetCode data via Rejection Sampling.
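Rejection sampling here means drawing several candidate responses per query and keeping only those that pass an acceptance check. The following is a minimal sketch under stated assumptions, not the authors' pipeline: `generate` stands in for sampling from the model, `passes_tests` is a hypothetical toy harness that executes a response against a single hidden test, and `num_samples` and the keep-first-accepted policy are assumptions, since the text does not say how many samples are drawn or kept per query.

```python
from typing import Any, Callable, Dict, List, Tuple


def rejection_sample(
    queries: List[str],
    generate: Callable[[str], str],
    accept: Callable[[str, str], bool],
    num_samples: int = 8,
) -> List[Tuple[str, str]]:
    """Draw up to num_samples responses per query; keep the first accepted one."""
    dataset: List[Tuple[str, str]] = []
    for query in queries:
        for _ in range(num_samples):
            response = generate(query)
            if accept(query, response):
                dataset.append((query, response))
                break  # assumption: one accepted response per query is enough
    return dataset


# --- Toy stand-ins (hypothetical, for illustration only) ---
def make_toy_generator(candidates: List[str]) -> Callable[[str], str]:
    """Cycle through fixed candidate responses instead of calling a real model."""
    state = {"i": 0}

    def generate(query: str) -> str:
        response = candidates[state["i"] % len(candidates)]
        state["i"] += 1
        return response

    return generate


def passes_tests(query: str, response: str) -> bool:
    """Toy test harness: run the response and check one hidden test case."""
    namespace: Dict[str, Any] = {}
    try:
        exec(response, namespace)
        return namespace["add"](2, 3) == 5
    except Exception:
        return False


generate = make_toy_generator([
    "def add(a, b):\n    return a - b\n",  # wrong: rejected
    "def add(a, b):\n    return a + b\n",  # correct: accepted
])
data = rejection_sample(["Implement add(a, b)."], generate, passes_tests)
print(data[0][1])
```

Running model output with `exec` is only tolerable in a toy like this; a real harness would sandbox execution and run the full test suite.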

Attention Cracking

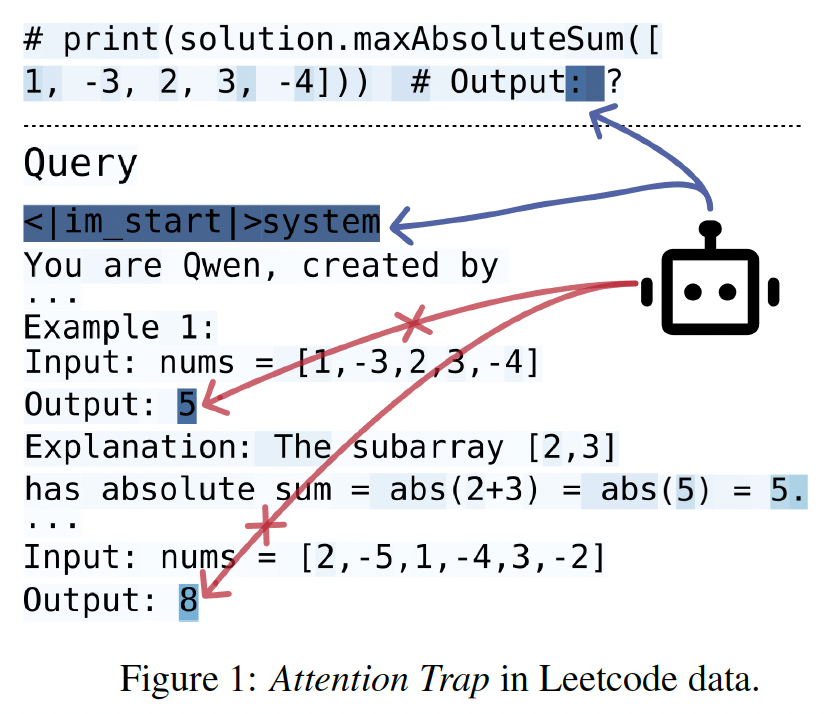

Removing the target outputs from the queries prevents the model from relying on superficial pattern matching and forces it to attend to the problem description and the generated code when reasoning about outputs. The AC stage modifies LeetCode queries in two ways:

AC Queries: remove the target outputs from the examples in the original queries used for training (e.g., remove “Output: 5” and “Output: 8” in Figure 1).

Fetch Queries: append “Please give all the examples in the answer.” to the end of each query, making the collected responses more likely to include the examples.
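The two query transformations can be sketched with plain string processing. This assumes LeetCode-style examples in which each target output sits on its own line beginning with `Output:`; the helper names `make_ac_query` and `make_fetch_query` and the sample problem are hypothetical. In this sketch the Fetch Query is built from the original query, so the examples it asks the model to restate still carry their outputs.

```python
import re

FETCH_SUFFIX = "Please give all the examples in the answer."

# Assumption: each example's target output is on its own line starting with "Output:".
OUTPUT_LINE = re.compile(r"^[ \t]*Output:[^\n]*\n?", flags=re.MULTILINE)


def make_ac_query(query: str) -> str:
    """AC Query: drop the target-output lines from every example."""
    return OUTPUT_LINE.sub("", query)


def make_fetch_query(query: str) -> str:
    """Fetch Query: append the instruction to the end of the original query."""
    return query.rstrip() + "\n\n" + FETCH_SUFFIX


query = (
    "Given two integers a and b, return their sum.\n"
    "Example 1:\n"
    "Input: a = 1, b = 2\n"
    "Output: 3\n"
    "Example 2:\n"
    "Input: a = 10, b = 20\n"
    "Output: 30\n"
)
ac_query = make_ac_query(query)
fetch_query = make_fetch_query(query)
print(ac_query)
print(fetch_query)
```

Real LeetCode examples often include an `Explanation:` line that can restate the output; the text does not say whether such lines are also removed, so this sketch leaves them untouched.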

Fetch Queries are used to collect responses from the LC-Base model. These responses are filtered to keep those that contain the same examples as the query, with priority given to responses that pass the tests. Finally, we fine-tune the Instruct model on the AC Queries paired with the filtered responses to derive the AC model.
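The filtering step can be sketched as follows, assuming that two texts contain "the same examples" when they contain the same set of `Input:` lines, and that a pass/fail flag for each response is already available from a test harness. `Candidate`, `example_inputs`, and `select_response` are hypothetical names, and returning a single response per query is an assumption.

```python
import re
from dataclasses import dataclass
from typing import List, Optional, Set

# Assumption: examples are identified by their "Input:" lines.
INPUT_LINE = re.compile(r"^[ \t]*Input:[ \t]*(.+?)[ \t]*$", flags=re.MULTILINE)


@dataclass
class Candidate:
    response: str
    passed_tests: bool  # assumed to come from an external test harness


def example_inputs(text: str) -> Set[str]:
    """Collect the example inputs that appear in a text."""
    return set(INPUT_LINE.findall(text))


def select_response(query: str, candidates: List[Candidate]) -> Optional[Candidate]:
    """Keep candidates that restate the query's examples; prefer ones that pass tests."""
    target = example_inputs(query)
    matching = [c for c in candidates if example_inputs(c.response) == target]
    if not matching:
        return None
    passing = [c for c in matching if c.passed_tests]
    return (passing or matching)[0]


query = (
    "Given two integers a and b, return their sum.\n"
    "Example 1:\nInput: a = 1, b = 2\nOutput: 3\n"
    "Example 2:\nInput: a = 10, b = 20\nOutput: 30\n"
    "Please give all the examples in the answer."
)
both = (
    "Example 1:\nInput: a = 1, b = 2\nOutput: 3\n"
    "Example 2:\nInput: a = 10, b = 20\nOutput: 30\n"
    "def add(a, b):\n    return a + b\n"
)
partial = "Example 1:\nInput: a = 1, b = 2\nOutput: 3\ndef add(a, b):\n    return a + b\n"

c1 = Candidate(partial, passed_tests=True)  # misses Example 2: dropped
c2 = Candidate(both, passed_tests=False)    # matching but failing
c3 = Candidate(both, passed_tests=True)     # matching and passing: preferred
chosen = select_response(query, [c1, c2, c3])
```

The selected response is then paired with the AC Query, not the Fetch Query, when fine-tuning the Instruct model.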

Rejection Sampling

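To prevent the AC-stage data modifications from degrading performance, we introduce an RS stage. In the AC stage, each AC Query is paired with a response generated from its Fetch Query, so the query and the response do not fully match. The RS stage instead feeds the AC Queries directly to the AC-trained model and uses its outputs, eliminating the attention distortion caused by these query-output mismatches.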
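One way to read the query-output mismatch is in terms of which prompt produced each training response. The sketch below tags every training pair with both its training prompt and its generation prompt: AC-stage pairs train on the AC Query but use a response generated from the Fetch Query, whereas RS pairs use a response generated from the AC Query itself. `ac_model` and `accept` are hypothetical stand-ins (the text does not state the RS acceptance criterion), and `TrainingPair`, `build_rs_pairs`, and `num_samples` are illustrative names, not part of the described method.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass(frozen=True)
class TrainingPair:
    train_prompt: str       # prompt the model is fine-tuned on
    generation_prompt: str  # prompt that actually produced the response
    response: str


def is_consistent(pair: TrainingPair) -> bool:
    """True when the response was generated from the training prompt itself."""
    return pair.train_prompt == pair.generation_prompt


def build_rs_pairs(
    ac_queries: List[str],
    ac_model: Callable[[str], str],
    accept: Callable[[str, str], bool],
    num_samples: int = 4,
) -> List[TrainingPair]:
    """RS stage: sample from the AC model on each AC Query and keep an accepted response."""
    pairs: List[TrainingPair] = []
    for query in ac_queries:
        for _ in range(num_samples):
            response = ac_model(query)  # generated from the AC Query itself
            if accept(query, response):
                pairs.append(TrainingPair(query, query, response))
                break
    return pairs


# --- Toy usage (hypothetical model and acceptance check) ---
ac_query = "Return a + b.\nExample 1:\nInput: a = 1, b = 2\n"
fetch_query = (
    "Return a + b.\nExample 1:\nInput: a = 1, b = 2\nOutput: 3\n\n"
    "Please give all the examples in the answer."
)

# AC-stage pair: trained on the AC Query, but the response came from the Fetch Query.
ac_pair = TrainingPair(
    ac_query,
    fetch_query,
    "Example 1:\nInput: a = 1, b = 2\nOutput: 3\ndef add(a, b):\n    return a + b\n",
)

rs_pairs = build_rs_pairs(
    [ac_query],
    ac_model=lambda q: "def add(a, b):\n    return a + b\n",
    accept=lambda q, r: "return a + b" in r,
)
print(is_consistent(ac_pair), all(is_consistent(p) for p in rs_pairs))  # prints: False True
```

In this reading, the AC-stage response can mention outputs (such as `Output: 3`) that the AC Query no longer shows, while every RS pair is generated from exactly the prompt it is trained on.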