Seed1.5-Thinking#

Note

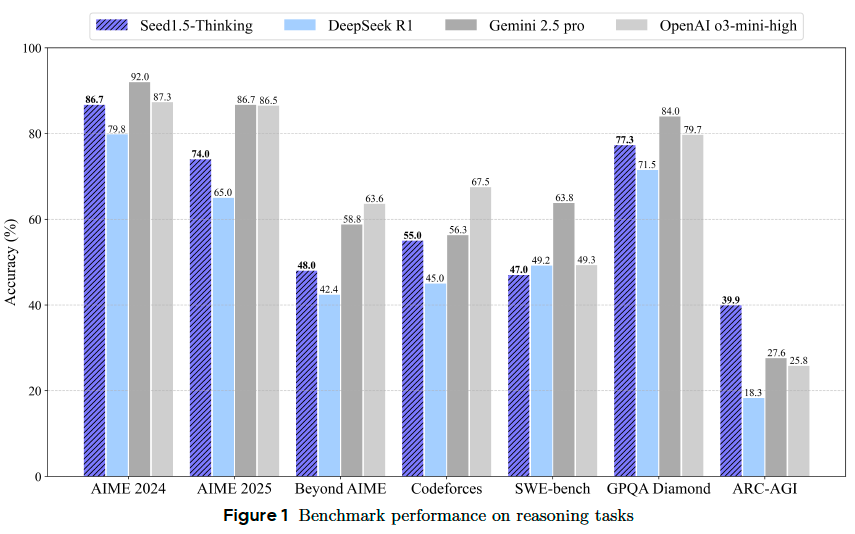

Compared to other state-of-the-art reasoning models, Seed1.5- Thinking is a Mixture-of-Experts (MoE) model with a relatively small size, featuring 20B activated and 200B total parameters.

RL Training Data#

Verifiable Problems#

For coding problems, we prioritize the source of high-quality and challenging algorithmic tasks, primarily drawn from esteemed competitive programming contests.

We filter data to ensure that each problem includes a comprehensive specification: a clear problem description, a set of unit tests, and a checker script. Unit tests validate the functional correctness of solutions, while the checker script enforces additional constraints such as output formatting and edge cases. We also perform difficulty filtering, ensuring that problems possess an appropriate level of complexity and applicability to real-world algorithmic reasoning.

Tip

For evaluation, the most accurate form is to submit the generated code to the official platforms. However, during reinforcement learning, real-time submission isn’t feasible. Thus, we developed an off-line evaluation set for efficient local validation.

Non-verifiable Problems#

We discard data with low sample score variance and low difficulty. To be specific, we use the SFT model to

generate multiple candidates for each prompt and then score them using a reward model. For these non-verifiable data, we employ a pairwise rewarding method for scoring and RL training.

Reward Modeling#

We have designed two progressive reward modeling solutions:

Seed-Verifier is based on a set of meticulously crafted principles written by humans. It leverages the powerful foundational capabilities of LLMs to evaluate a triplet consisting of the

question, reference answer, and model-generated answer.Seed-Thinking-Verifier is inspired by the human judgment process, which generates conclusive judgments through meticulous thinking and in-depth analysis. To achieve this, we trained a verifier that provides a detailed reasoning path for its evaluations. Specifically, we treated this as a verifiable task and optimized it alongside other mathematical reasoning tasks.

Supervised Fine-Tuning#

We curate an SFT data comprising 400k training instance, including 300k verifiable problems and 100k non-verifiable problems. Verifiable prompts are randomly sampled from RL training set. To generate high-quality responses with long CoT, we employ an iterative workflow that integrates model synthesis, human annotation, and rejection sampling.

Reinforcement Learning#

In the context of long-CoT RLHF, we encounter several challenges such as value model bias and the sparsity of reward signals. To address these issues, we draw on key techniques from our prior work

Value-Pretraining: We sample responses from a fixed policy, such as \(\pi_{\text{sft}}\), and update the value model using the Monte-Carlo return.

Decoupled-GAE: By employing different Generalized Advantage Estimation (GAE) parameters, such as \(\lambda_{\text{value}} = 1.0\) and \(\lambda_{\text{policy}} = 0.95\), we allow the value model to update in an unbiased manner.

Length-adaptive GAE: We set \(\lambda_{\text{policy}} = 1 - \frac{1}{\alpha l}\) where \(\alpha\) is a hyper-parameter and \(l\) is the response length.

Clip-Higher: In the Proximal Policy Optimization (PPO) algorithm, we decouple the upper and lower clip bounds by increasing the value of \(\epsilon_{\text{high}}\), we create more room for the increase of low-probability tokens.

Token-level Loss: Instead of defining the policy loss over entire responses, we define it over all tokens.

Positive Example LM Loss: This loss function is designed to boost the utilization efficiency of positive samples during the RL training process. We add a language model loss with a coefficient \(\mu\) for positive examples:

Tip

When merging data from different domains and incorporating diverse scoring mechanisms, we face the challenge of interference between different data domains. To counteract this, we introduce Online Data Distribution Adaptation. This method transforms the stationary prompt distribution during reinforcement learning into an adaptive distribution.