Reflexion#

Note

Recent works such as ReAct have demonstrated the feasibility of autonomous decision-making

agents that are built on top of a large language model (LLM) core. Such approaches have been

so far limited to using in-context examples as a way of teaching the agents. In this paper, we propose an alternative approach called Reflexion that uses verbal reinforcement

to help agents learn from prior failings.

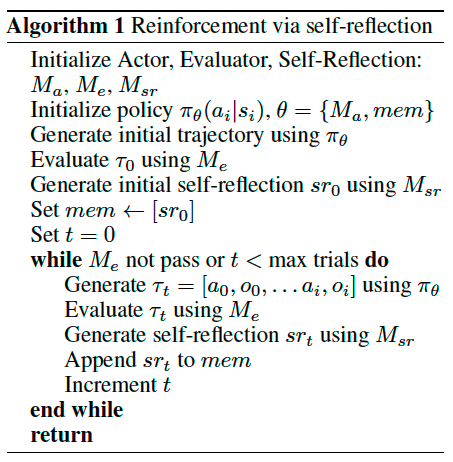

Reflexion: reinforcement via verbal reflection#

Reflexion utilizing three distinct models: an Actor, an Evaluator and a Self-Reflection models.

Actor The Actor is built upon a large language model (LLM) that is specifically prompted to generate the necessary text and actions conditioned on the state observations. We explore various Actor models, including Chain of Thought and ReAct.

Evaluator The Evaluator component of the Reflexion framework plays a crucial role in assessing the quality of the generated outputs produced by the Actor. For reasoning tasks, we explore reward functions based on exact match (EM) grading, ensuring that the generated output aligns closely with the expected solution.

Self-reflection Given a sparse reward signal, such as a binary success status (success/fail), the current trajectory, and its persistent memory mem, the self-reflection model generates nuanced and specific feedback.

Memory In the RL setup, the trajectory history serves as the short-term memory while outputs from the Self-Reflection model are stored in long-term memory.

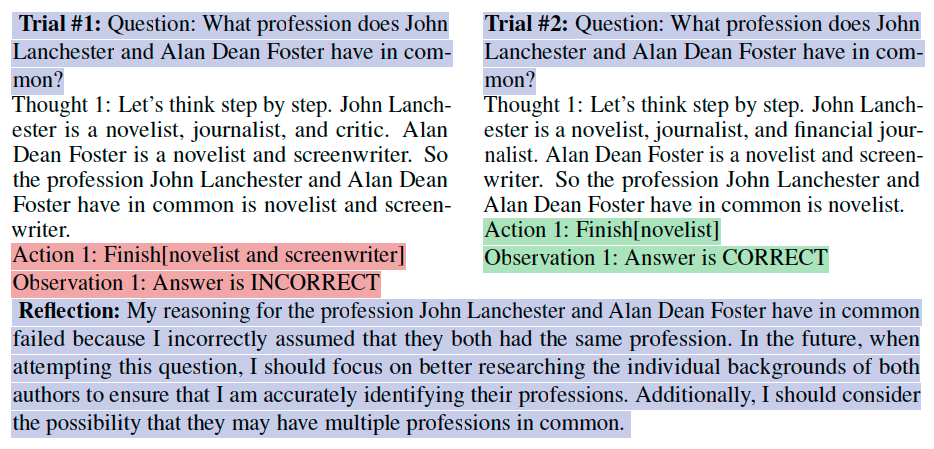

The Reflexion process