WizardCoder

Note

WizardCoder empowers Code LLMs with complex instruction fine-tuning by adapting the Evol-Instruct method to the code domain.

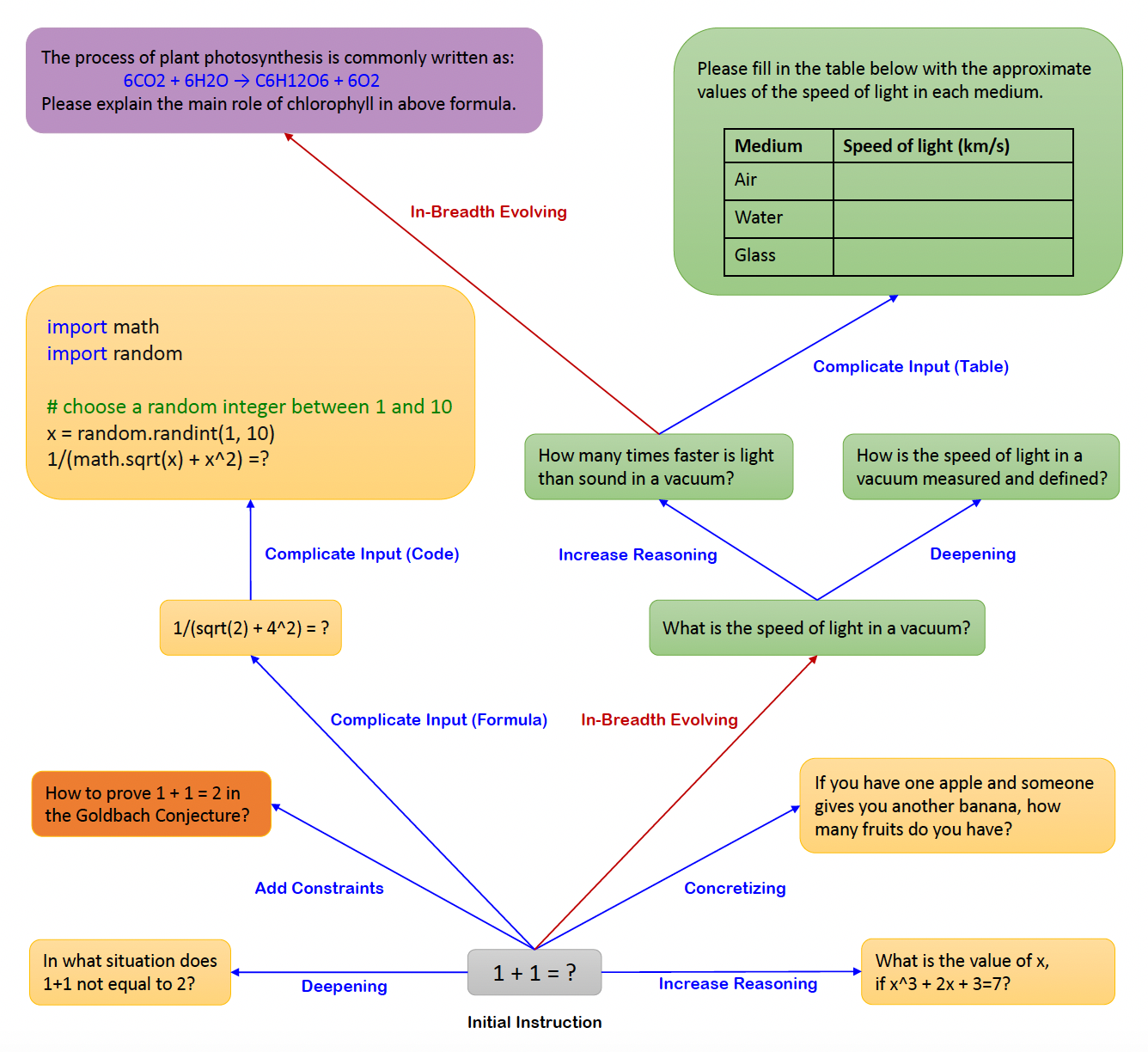

Evol-Instruct

Starting from a simple initial instruction, “1+1=?”, our method randomly selects either In-depth Evolving (blue direction line) or In-breadth Evolving (red direction line) to upgrade the simple instruction to a more complex one or to create a new one (to increase diversity). In-depth Evolving includes five types of operations: add constraints, deepening, concretizing, increase reasoning steps, and complicate input. In-breadth Evolving generates a completely new instruction based on the given instruction. These six operations are implemented by prompting an LLM with specific prompts.
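As a rough illustration, one selection step can be sketched in Python. This is a minimal sketch under several assumptions: `llm` is any callable mapping a prompt string to a completion (a hypothetical stand-in for the real model), the 50/50 split between In-depth and In-breadth Evolving is an arbitrary choice, and the prompt wording is invented for illustration rather than taken from the method's actual prompts.

```python
import random
from typing import Callable

# The five In-depth Evolving operations listed in the text.
IN_DEPTH_OPS = [
    "add constraints",
    "deepening",
    "concretizing",
    "increase reasoning steps",
    "complicate input",
]
# The sixth operation: In-breadth Evolving creates a new instruction.
IN_BREADTH_OP = "in-breadth evolving"


def evolve_instruction(
    instruction: str,
    llm: Callable[[str], str],
    rng: random.Random,
) -> tuple[str, str]:
    """Apply one randomly selected operation; return (operation, new instruction)."""
    if rng.random() < 0.5:  # assumed 50/50 split between the two directions
        op = rng.choice(IN_DEPTH_OPS)
        # Hypothetical prompt wording: make the instruction more complex.
        prompt = f"Rewrite this instruction to be harder using '{op}':\n{instruction}"
    else:
        op = IN_BREADTH_OP
        # Hypothetical prompt wording: create a new instruction for diversity.
        prompt = f"Write a brand-new instruction in the same domain as:\n{instruction}"
    return op, llm(prompt)


# Toy stand-in for an LLM: appends a marker to the prompt's last line.
def toy_llm(prompt: str) -> str:
    return prompt.splitlines()[-1] + " (evolved)"


op, evolved = evolve_instruction("1+1=?", toy_llm, random.Random(0))
print(op, "->", evolved)
```

Passing an explicit `random.Random` instance keeps the selection reproducible across runs.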

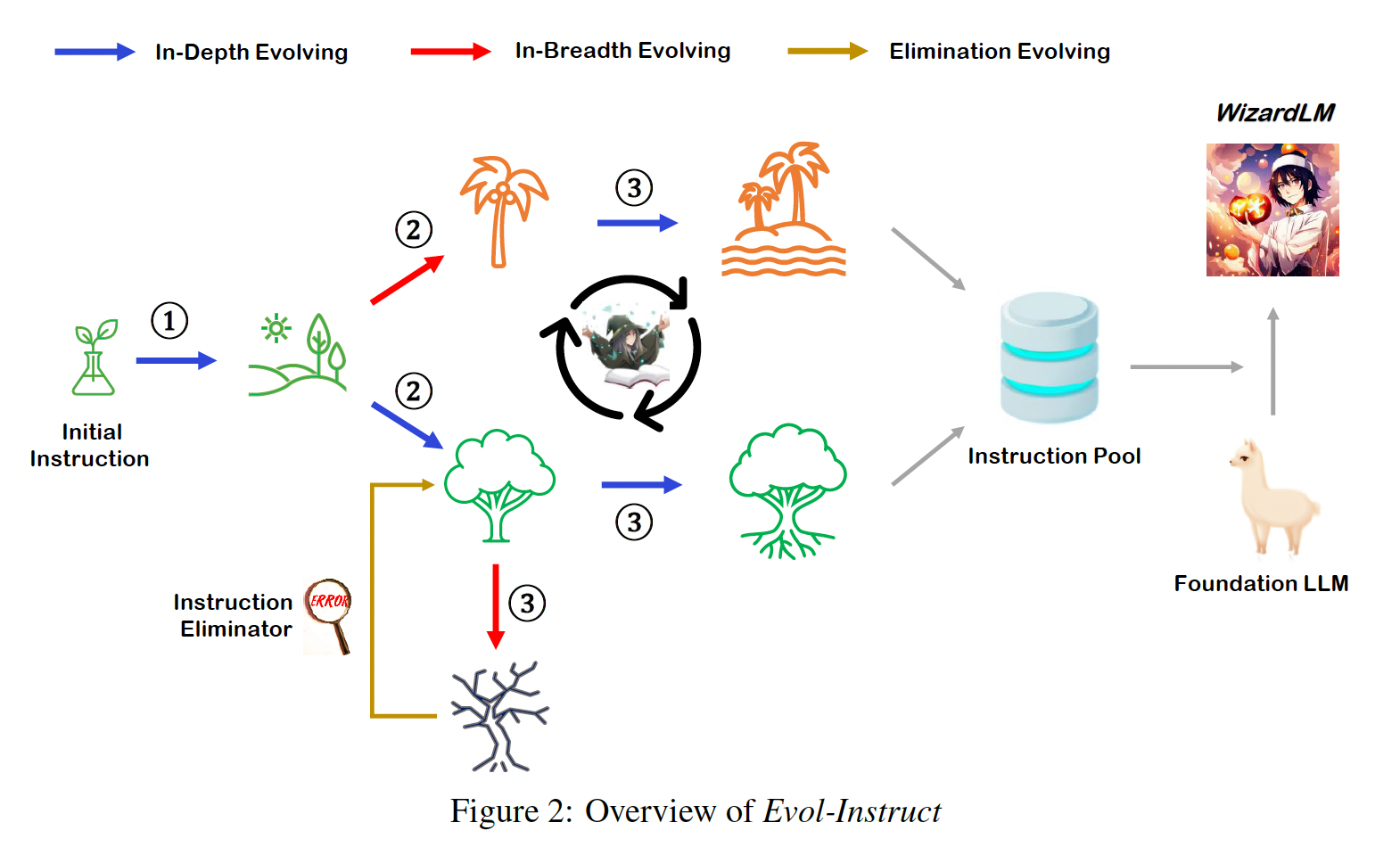

WizardLM

We start the evolution from a given initial instruction dataset \(D^{(0)} = (I^{(0)}_{k}, R^{(0)}_{k})_{1\le k\le N}\), where \(I^{(0)}_{k}\) is the \(k\)-th instruction in \(D^{(0)}\), \(R^{(0)}_{k}\) is the corresponding response for the \(k\)-th instruction, and \(N\) is the number of samples in \(D^{(0)}\).

In each evolution round, we upgrade every instruction \(I^{(t)}\) in \(D^{(t)}\) to \(I^{(t+1)}\) by applying an LLM instruction evolution prompt, and then use the LLM to generate the corresponding responses \(R^{(t+1)}\) for the newly evolved \(I^{(t+1)}\). This yields an evolved instruction dataset \(D^{(t+1)}\). We execute the above process using ChatGPT.
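The round-by-round update from \(D^{(t)}\) to \(D^{(t+1)}\) can be sketched as a simple loop. The helper names `evolve_dataset` and `run_evolution`, and the `evolve`/`respond` callables, are hypothetical; in the described setup both roles are played by ChatGPT, whereas here they are plain Python functions so the sketch runs offline.

```python
from typing import Callable

Pair = tuple[str, str]  # (instruction I_k, response R_k)


def evolve_dataset(
    dataset: list[Pair],
    evolve: Callable[[str], str],
    respond: Callable[[str], str],
) -> list[Pair]:
    """One round, D^(t) -> D^(t+1): evolve each I_k, then answer the new I_k."""
    next_round = []
    for instruction, _old_response in dataset:
        new_instruction = evolve(instruction)  # I_k^(t) -> I_k^(t+1)
        next_round.append((new_instruction, respond(new_instruction)))  # R_k^(t+1)
    return next_round


def run_evolution(
    d0: list[Pair],
    evolve: Callable[[str], str],
    respond: Callable[[str], str],
    rounds: int,
) -> list[list[Pair]]:
    """Return [D^(0), D^(1), ..., D^(rounds)]."""
    history = [d0]
    for _ in range(rounds):
        history.append(evolve_dataset(history[-1], evolve, respond))
    return history


# Offline stand-ins for the two LLM calls (hypothetical).
history = run_evolution(
    [("1+1=?", "2")],
    evolve=lambda s: s + " with constraints",
    respond=lambda s: f"answer to: {s}",
    rounds=2,
)
```

Because each instruction is evolved exactly once per round, every \(D^{(t)}\) keeps the same size \(N\) as \(D^{(0)}\); the old responses are discarded and regenerated for the new instructions.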

Evol-Instruct Prompts for Code

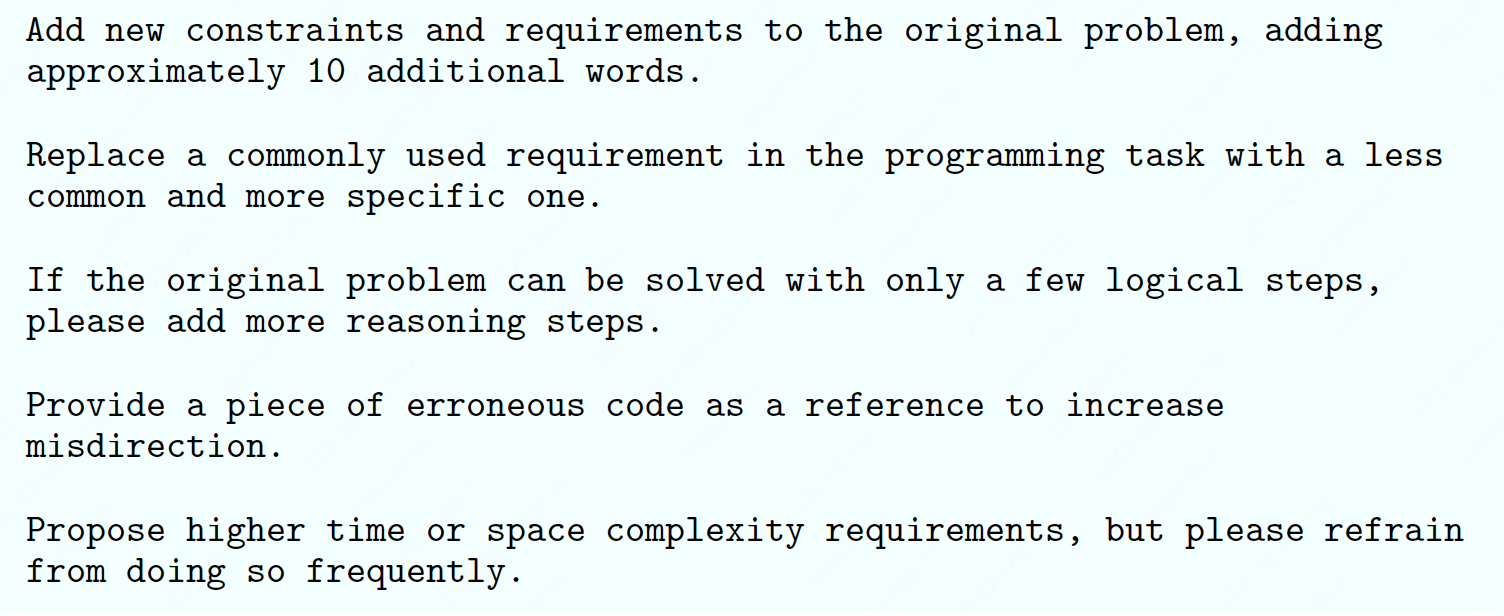

To adapt Evol-Instruct to the realm of code, we made the following modifications to the evolutionary prompt:

Streamlined the evolutionary instructions by removing deepening, complicating input, and In-breadth Evolving.

Simplified the form of evolutionary prompts by unifying the evolutionary prompt template.

Addressing the specific characteristics of the code domain, we added two evolutionary instructions: code debugging and code time-space complexity constraints.
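As a sanity check on the bookkeeping, the sketch below applies the three removals and two additions to the six general-purpose operations and confirms that five code-specific evolution types remain, matching the count given below. The lowercase operation labels are informal names chosen for this sketch, not the exact wording used in the prompts.

```python
# The six general-purpose Evol-Instruct operations (informal labels).
GENERAL_OPS = {
    "add constraints",
    "deepening",
    "concretizing",
    "increase reasoning steps",
    "complicate input",
    "in-breadth evolving",
}
# Operations dropped for code, and the two code-specific ones added.
REMOVED = {"deepening", "complicate input", "in-breadth evolving"}
ADDED = {"code debugging", "time-space complexity constraints"}

# 6 - 3 + 2 = 5 evolution types for the code domain.
CODE_OPS = (GENERAL_OPS - REMOVED) | ADDED
print(sorted(CODE_OPS))
```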

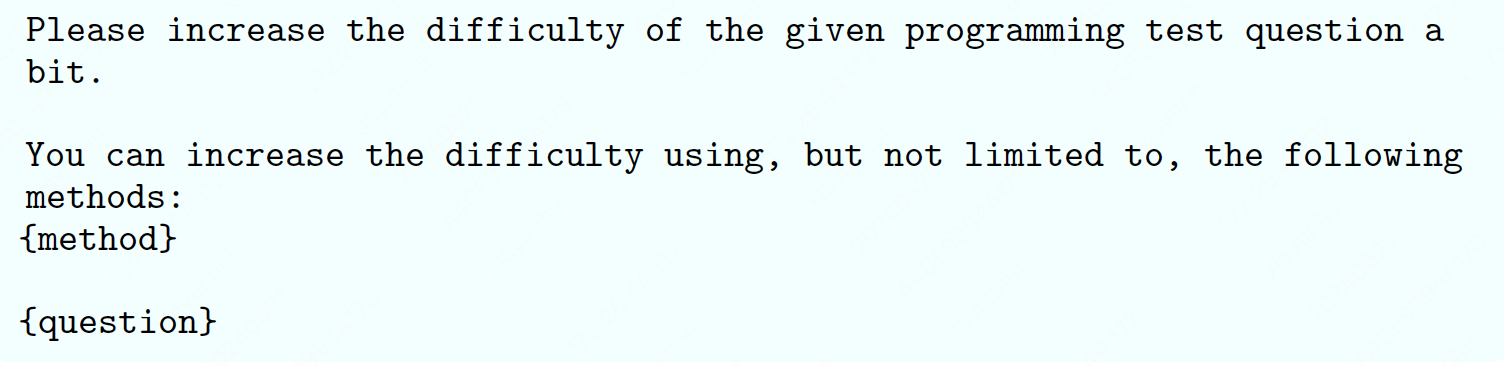

The unified code evolutionary prompt template is as follows:

Here, {question} represents the current code instruction awaiting evolution, and {method} is the type of evolution. The five types we used are listed as follows:
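Filling the template amounts to substituting the two placeholders. In the sketch below only `{question}` and `{method}` come from the text; the surrounding template wording and the `build_evolution_prompt` name are hypothetical placeholders, not the actual WizardCoder prompt.

```python
# Hypothetical template: only the {method} and {question} placeholders are
# taken from the text; the surrounding wording is an illustrative stand-in.
TEMPLATE = (
    "Please increase the difficulty of the given programming question.\n"
    "Use this method:\n"
    "{method}\n"
    "\n"
    "Question:\n"
    "{question}"
)


def build_evolution_prompt(question: str, method: str) -> str:
    """Fill the unified template for one code instruction and one evolution type."""
    return TEMPLATE.format(question=question, method=method)


prompt = build_evolution_prompt(
    question="Write a function that returns {'sum': total} for a list of ints.",
    method="code debugging",
)
print(prompt)
```

`str.format` inserts substituted values literally without re-parsing them, so a question containing braces (common in code) does not break the template. Literal braces in the template text itself would need to be doubled (`{{` and `}}`).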

To construct the training dataset, we initialized it with Code Alpaca, a 20K-sample instruction-following dataset. We then apply Evol-Instruct to this dataset iteratively. After each round, we merge the evolved data from all rounds so far with the original dataset, fine-tune StarCoder on the result, and evaluate the pass@1 metric on HumanEval. Once pass@1 declines, we stop applying Evol-Instruct and select the model with the highest pass@1 as the final model.
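The round-selection procedure can be expressed as an evolve, merge, fine-tune, evaluate loop that stops at the first decline in pass@1. This is a minimal sketch: `evolve_round`, `finetune`, and `pass_at_1` are hypothetical callables standing in for Evol-Instruct, StarCoder fine-tuning, and HumanEval evaluation, and `max_rounds` is an added safety cap not mentioned in the text. The toy stubs at the end use made-up scores purely to exercise the control flow; they are not results.

```python
from typing import Any, Callable

Example = tuple[str, str]  # (instruction, response)


def select_model(
    seed: list[Example],
    evolve_round: Callable[[list[Example]], list[Example]],
    finetune: Callable[[list[Example]], Any],
    pass_at_1: Callable[[Any], float],
    max_rounds: int = 10,  # assumed safety cap
) -> tuple[Any, float, int]:
    """Evolve until pass@1 declines; return (best_model, best_score, best_round)."""
    rounds = [seed]  # rounds[0] is the seed data (Code Alpaca in the text)
    best: tuple[Any, float, int] = (None, float("-inf"), 0)
    prev_score = float("-inf")
    for r in range(1, max_rounds + 1):
        rounds.append(evolve_round(rounds[-1]))
        # Train on the original data merged with every evolved round so far.
        merged = [ex for data in rounds for ex in data]
        model = finetune(merged)
        score = pass_at_1(model)
        if score > best[1]:
            best = (model, score, r)
        if score < prev_score:
            break  # pass@1 declined: stop applying Evol-Instruct
        prev_score = score
    return best


# Toy stubs: the "model" is just the size of its training set, and the
# scores are arbitrary numbers chosen only to trigger the stopping rule.
seed = [("q1", "a1"), ("q2", "a2")]
toy_scores = {4: 1.0, 6: 2.0, 8: 1.5}
best_model, best_score, best_round = select_model(
    seed,
    evolve_round=lambda d: [(i + " (evolved)", r) for i, r in d],
    finetune=len,
    pass_at_1=lambda model: toy_scores[model],
)
```

Tracking the best score separately from the previous one keeps the selection correct even if the cap is reached before any decline is observed.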