Agents in Software Engineering

Note

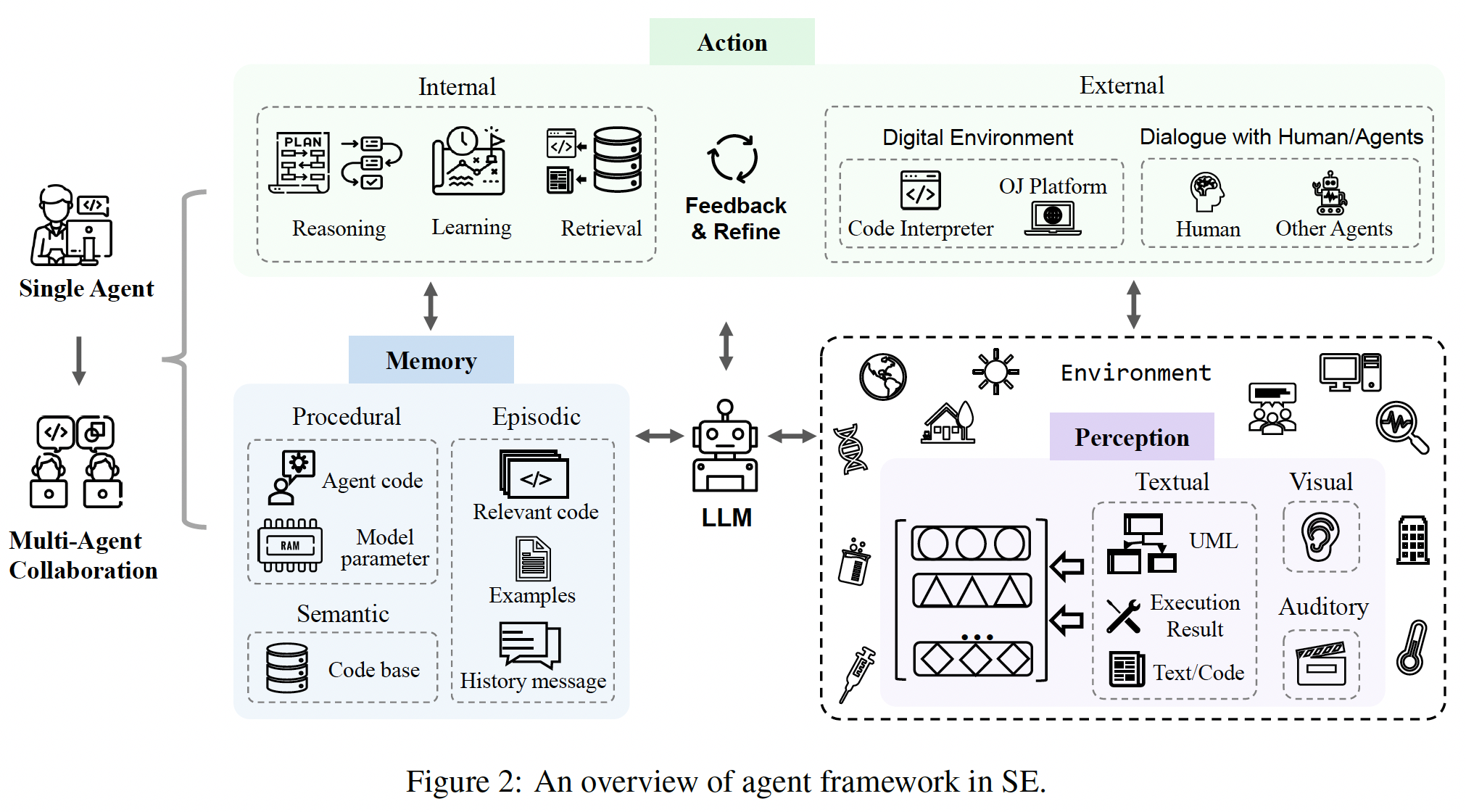

In this paper, we conduct the first survey of studies that combine LLM-based agents with software engineering (SE), and we present a framework of LLM-based agents in SE that comprises three key modules: perception, memory, and action.

Perception

Tip

The perception module receives information from the external environment in various modalities and converts it into an input form that the LLM can understand and process.

Textual Input

Token-based Input. Token-based input is the most common input mode: it treats code directly as natural-language text and feeds the resulting token sequence to the LLM, ignoring the structural characteristics of code.
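A minimal sketch of token-based input follows. It assumes a toy regex lexer and a vocabulary built on the fly; real LLMs use learned subword tokenizers (e.g. BPE), which this does not reproduce. The point is that the code is handled as a flat string, so only token order survives.

```python
import re

# Toy lexer (an illustrative assumption, not an LLM tokenizer):
# identifiers/keywords, integer literals, or any single non-space symbol.
TOKEN_PATTERN = re.compile(r"[A-Za-z_]\w*|\d+|\S")


def tokenize(code: str) -> list[str]:
    """Treat code as plain text and split it into a flat token sequence."""
    return TOKEN_PATTERN.findall(code)


def encode(tokens: list[str], vocab: dict[str, int]) -> list[int]:
    """Map each token to an integer id, growing the hypothetical vocabulary as needed."""
    ids = []
    for tok in tokens:
        if tok not in vocab:
            vocab[tok] = len(vocab)
        ids.append(vocab[tok])
    return ids


snippet = "def add(a, b):\n    return a + b"
tokens = tokenize(snippet)
vocab: dict[str, int] = {}
print(tokens)  # ['def', 'add', '(', 'a', ',', 'b', ')', ':', 'return', 'a', '+', 'b']
print(encode(tokens, vocab))  # [0, 1, 2, 3, 4, 5, 6, 7, 8, 3, 9, 5]
```

Indentation and line breaks are discarded entirely, which is exactly the loss of code structure that the tree/graph-based inputs try to address.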

Tree/Graph-based Input. Exploiting the characteristics of code, tree/graph-based input converts code into tree structures such as abstract syntax trees (ASTs) or graph structures such as control flow graphs (CFGs) to model the structural information of code.
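As one illustration of tree-based input, the sketch below parses Python code with the standard `ast` module and linearizes the tree by pre-order traversal, keeping identifiers on name-bearing nodes. This particular linearization is an assumption chosen for simplicity; models may instead consume the tree or a graph (e.g. a CFG) directly, which is not shown here.

```python
import ast


def linearize_ast(code: str) -> list[str]:
    """Pre-order traversal of the AST, emitting node type names plus identifiers."""
    tree = ast.parse(code)
    sequence: list[str] = []

    def visit(node: ast.AST) -> None:
        # Skip Load/Store/Del context markers, which add little structural information.
        if isinstance(node, ast.expr_context):
            return
        label = type(node).__name__
        # Attach identifiers so that names survive the linearization.
        if isinstance(node, ast.Name):
            label += f":{node.id}"
        elif isinstance(node, ast.FunctionDef):
            label += f":{node.name}"
        elif isinstance(node, ast.arg):
            label += f":{node.arg}"
        sequence.append(label)
        for child in ast.iter_child_nodes(node):
            visit(child)

    visit(tree)
    return sequence


print(linearize_ast("def add(a, b):\n    return a + b"))
# ['Module', 'FunctionDef:add', 'arguments', 'arg:a', 'arg:b',
#  'Return', 'BinOp', 'Name:a', 'Add', 'Name:b']
```

Unlike the token view, this sequence makes explicit that `a + b` is a binary operation returned from the function body, independent of formatting.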

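Hybrid-based Input. Hybrid-based input combines more than one of the above representations, for example a token sequence together with tree/graph structure, to give the LLM different types of information about the code.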
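One plausible hybrid scheme, offered here as an assumption rather than a method from the surveyed work, concatenates the surface token sequence with a sequence of AST node types so that a sequence model sees both views. The separator tokens `<code>` and `<ast>` are hypothetical.

```python
import ast
import re

TOKEN_PATTERN = re.compile(r"[A-Za-z_]\w*|\d+|\S")


def code_tokens(code: str) -> list[str]:
    """Surface view: code treated as plain text."""
    return TOKEN_PATTERN.findall(code)


def ast_node_types(code: str) -> list[str]:
    """Structural view: node type names in ast.walk's breadth-first order,
    skipping Load/Store/Del context markers."""
    return [
        type(node).__name__
        for node in ast.walk(ast.parse(code))
        if not isinstance(node, ast.expr_context)
    ]


def hybrid_input(code: str) -> list[str]:
    # Hypothetical marker tokens separate the two views.
    return ["<code>", *code_tokens(code), "<ast>", *ast_node_types(code)]


print(hybrid_input("x = 1"))
# ['<code>', 'x', '=', '1', '<ast>', 'Module', 'Assign', 'Name', 'Constant']
```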

Visual Input

Visual input uses image data such as UI sketches or UML design diagrams as model input, and the model makes inference decisions by modeling and analyzing these images.

Memory

Tip

The memory module provides additional useful information to help the LLM make reasoning decisions.

Semantic Memory

Semantic memory stores acknowledged world knowledge of LLM-based agents, usually in the form of external knowledge retrieval bases which include documents and APIs. For example, Ren et al. (2023) propose KPC, a novel Knowledge-driven Prompt Chaining-based code generation approach, which utilizes fine-grained exception-handling knowledge extracted from API documentation to assist LLMs in code generation.
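A minimal sketch of semantic memory as an external knowledge base, loosely inspired by KPC's use of exception-handling knowledge from API documentation. The `API_KNOWLEDGE` entries, the lookup rule, and the prompt format are hypothetical; KPC's actual knowledge extraction and prompt chaining are not reproduced.

```python
import re

# Hypothetical semantic memory: API name -> exception-handling knowledge
# distilled from documentation (entries written for illustration).
API_KNOWLEDGE: dict[str, str] = {
    "open": "open() raises FileNotFoundError if the file does not exist.",
    "int": "int() raises ValueError if the string is not a valid integer literal.",
    "json.loads": "json.loads() raises json.JSONDecodeError on malformed input.",
}


def lookup_knowledge(task: str, memory: dict[str, str]) -> list[str]:
    """Return the knowledge for every API name mentioned as a word in the task."""
    mentioned = set(re.findall(r"[\w.]+", task))
    return [fact for api, fact in memory.items() if api in mentioned]


def build_prompt(task: str, memory: dict[str, str]) -> str:
    """Inject the retrieved knowledge into the code-generation prompt."""
    facts = lookup_knowledge(task, memory)
    knowledge = "\n".join(f"- {fact}" for fact in facts) or "- (none)"
    return (
        f"Task: {task}\n"
        f"Relevant API knowledge:\n{knowledge}\n"
        "Write the code, handling the exceptions listed above."
    )


print(build_prompt("Read a config file with open and parse it with json.loads", API_KNOWLEDGE))
```

Matching on whole words rather than substrings avoids false hits such as `int` inside `print`; a real system would more likely use a proper retriever over the documentation.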

Episodic Memory

Episodic memory records content related to the current case and experience information from previous decision-making processes.

Content related to the current case, such as relevant information found in a search database or examples provided through in-context learning (ICL), can supply additional knowledge for LLM reasoning. For example, Li et al. (2023c) propose a new prompting technique named AceCoder, which selects similar programs as examples in prompts.
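A minimal sketch of using episodic memory for in-context learning: select the stored programs whose requirements are most similar to the new one and place them in the prompt as examples. Similarity here is token-set Jaccard overlap, a deliberately simple stand-in; it is not AceCoder's actual retriever, and the stored examples are hypothetical.

```python
import re


def tokens(text: str) -> set[str]:
    """Lower-cased word set used for a simple lexical similarity."""
    return set(re.findall(r"\w+", text.lower()))


def jaccard(a: set[str], b: set[str]) -> float:
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def select_examples(query: str, memory: list[tuple[str, str]], k: int = 2) -> list[tuple[str, str]]:
    """Pick the k stored (requirement, code) pairs most similar to the query."""
    q = tokens(query)
    ranked = sorted(memory, key=lambda ex: jaccard(q, tokens(ex[0])), reverse=True)
    return ranked[:k]


def build_icl_prompt(query: str, memory: list[tuple[str, str]], k: int = 2) -> str:
    """Few-shot prompt: similar examples first, then the new requirement."""
    shots = [f"Requirement: {req}\nCode:\n{code}\n" for req, code in select_examples(query, memory, k)]
    return "\n".join(shots + [f"Requirement: {query}\nCode:\n"])


# Hypothetical episodic memory of past (requirement, program) pairs.
MEMORY = [
    ("return the sum of a list of numbers", "def total(xs):\n    return sum(xs)"),
    ("reverse a string", "def rev(s):\n    return s[::-1]"),
    ("return the maximum of a list of numbers", "def biggest(xs):\n    return max(xs)"),
]

print(build_icl_prompt("return the minimum of a list of numbers", MEMORY))
```

The two list-processing programs are chosen as examples and the unrelated string-reversal program is left out.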

Procedural Memory

Procedural memory comprises implicit and explicit knowledge. Implicit knowledge is stored in the LLM's parameters: existing work usually trains new LLMs on large amounts of data so that they acquire rich implicit knowledge for completing various downstream tasks.

Explicit knowledge is written in the agent’s code, enabling the agent to operate automatically.

Action

Tip

The action module includes two types of actions: internal and external. Internal actions (reasoning, retrieval, and learning) are carried out within the agent, while external actions interact with the external environment to obtain feedback information.

Internal Action

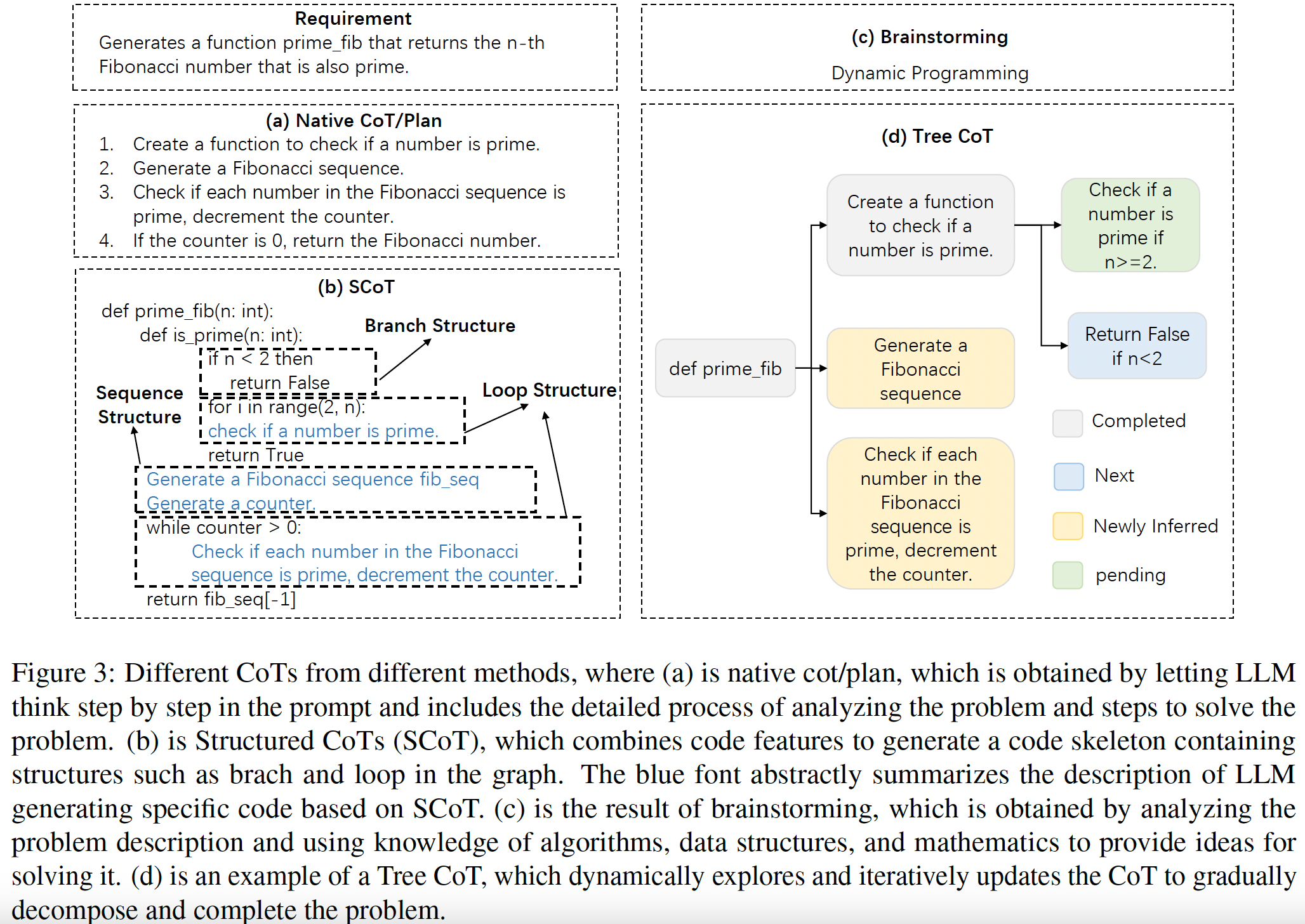

Reasoning Action. A rigorous reasoning process is key to how LLM-based agents complete tasks, and Chain-of-Thought (CoT) is an effective way of reasoning. As shown in Figure 3, existing work has explored different forms of CoT.
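A minimal sketch of a zero-shot CoT-style prompt for code generation, plus a helper that separates the reasoning steps from the final answer. The `Step N:` / `Answer:` format is a hypothetical convention imposed by the prompt, the CoT variants in Figure 3 are not reproduced, and the response below is a hand-written stand-in, not real model output.

```python
def build_cot_prompt(task: str) -> str:
    """Ask the model to reason step by step before giving the code."""
    return (
        f"Task: {task}\n"
        "Let's think step by step. Number each step as 'Step N:', "
        "then give the final code after a line that says 'Answer:'."
    )


def split_cot_response(response: str) -> tuple[list[str], str]:
    """Separate numbered reasoning steps from the final answer."""
    reasoning, _, answer = response.partition("Answer:")
    steps = [line.strip() for line in reasoning.splitlines() if line.strip().startswith("Step")]
    return steps, answer.strip()


# Hand-written stand-in for an LLM response (not real model output).
response = """Step 1: The input is a list of integers.
Step 2: Keep only the even numbers.
Step 3: Sum them.
Answer:
def sum_even(xs):
    return sum(x for x in xs if x % 2 == 0)"""

steps, code = split_cot_response(response)
print(len(steps))  # 3
print(code)
```

Keeping the steps separate from the answer lets an agent log or check the reasoning while passing only the code on to execution.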

Retrieval Action. The retrieval action retrieves relevant information from a knowledge base to help the reasoning action make correct decisions. Both the query used for retrieval and the retrieved content can be of different types, so retrieval actions can be divided into the following types:

Text-Code. For example, Zan et al. (2022a) propose a novel framework with APIRetriever and APICoder modules. Specifically, the APIRetriever retrieves useful APIs, and then the APICoder generates code using these retrieved APIs.
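The two-stage retrieve-then-generate structure described above can be sketched as follows. This is not Zan et al.'s implementation: the API catalogue, the word-overlap scoring in `retrieve_apis`, and the `generate` callable (standing in for an LLM in the APICoder role) are all hypothetical.

```python
import re
from typing import Callable

# Hypothetical API catalogue: name -> short description.
API_DOCS: dict[str, str] = {
    "pandas.read_csv": "read a csv file into a dataframe",
    "pandas.DataFrame.groupby": "group dataframe rows by column values",
    "numpy.linspace": "evenly spaced numbers over an interval",
}


def words(text: str) -> set[str]:
    return set(re.findall(r"[a-z]+", text.lower()))


def retrieve_apis(query: str, docs: dict[str, str], k: int = 2) -> list[str]:
    """Retriever stage: rank APIs by word overlap between query and description."""
    q = words(query)
    ranked = sorted(docs, key=lambda api: len(q & words(docs[api])), reverse=True)
    # Drop APIs that share no words with the query.
    return [api for api in ranked[:k] if q & words(docs[api])]


def text_to_code(query: str, generate: Callable[[str], str]) -> str:
    """Coder stage: condition generation on the retrieved APIs."""
    apis = retrieve_apis(query, API_DOCS)
    prompt = f"Useful APIs: {', '.join(apis)}\nTask: {query}\nCode:"
    return generate(prompt)


# A stub "generator" that echoes its prompt, so the retrieved APIs are visible.
print(text_to_code("load a csv file and group rows by city", lambda prompt: prompt))
```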

Text-Text.

Existing retrieval methods can be divided into sparse-based retrieval and dense-based retrieval.

The dense-based retrieval method encodes the input into a high-dimensional vector, compares its semantic similarity with the vectors of the candidate samples, and selects the k samples with the highest similarity.
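The top-k selection step of dense retrieval can be sketched as follows. The vectors are assumed to come from some embedding model that is not shown; the hand-written 3-dimensional vectors are placeholders for real high-dimensional embeddings, and cosine similarity is one common choice of similarity measure.

```python
import heapq
import math


def cosine(u: list[float], v: list[float]) -> float:
    """Cosine similarity between two vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def dense_top_k(query_vec: list[float], corpus: list[tuple[str, list[float]]], k: int) -> list[str]:
    """Return the ids of the k corpus samples most similar to the query vector."""
    scored = ((cosine(query_vec, vec), sample_id) for sample_id, vec in corpus)
    return [sample_id for _, sample_id in heapq.nlargest(k, scored)]


# Placeholder embeddings for three stored code samples.
corpus = [
    ("sort_list", [0.9, 0.1, 0.0]),
    ("reverse_string", [0.0, 0.2, 0.9]),
    ("sort_dict", [0.8, 0.3, 0.1]),
]
print(dense_top_k([1.0, 0.2, 0.0], corpus, k=2))  # ['sort_list', 'sort_dict']
```

`heapq.nlargest` avoids sorting the whole corpus; at scale, real systems typically use an approximate nearest-neighbor index instead of this linear scan.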

The sparse-based retrieval method calculates metrics such as BM25 or TF-IDF to evaluate the text similarity between samples.
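A from-scratch sketch of BM25 scoring for sparse retrieval, using the standard Okapi BM25 formula with the commonly used defaults k1 = 1.5 and b = 0.75 and the smoothed IDF ln(1 + (N - n + 0.5) / (n + 0.5)). Lower-cased whitespace tokenization and the toy corpus are simplifying assumptions.

```python
import math
from collections import Counter


def bm25_scores(query: str, docs: list[str], k1: float = 1.5, b: float = 0.75) -> list[float]:
    """Score every document against the query with Okapi BM25."""
    tokenized = [doc.lower().split() for doc in docs]
    n_docs = len(tokenized)
    avgdl = sum(len(d) for d in tokenized) / n_docs
    # Document frequency: number of documents containing each term.
    df = Counter(term for d in tokenized for term in set(d))
    scores = []
    for d in tokenized:
        tf = Counter(d)
        score = 0.0
        for term in query.lower().split():
            if term not in tf:
                continue
            idf = math.log(1 + (n_docs - df[term] + 0.5) / (df[term] + 0.5))
            # Term-frequency saturation (k1) and document-length normalization (b).
            numerator = tf[term] * (k1 + 1)
            denominator = tf[term] + k1 * (1 - b + b * len(d) / avgdl)
            score += idf * numerator / denominator
        scores.append(score)
    return scores


docs = [
    "read a file and count lines",
    "sort a list of numbers",
    "count words in a file",
]
scores = bm25_scores("count file lines", docs)
best = max(range(len(docs)), key=scores.__getitem__)
print(docs[best])  # read a file and count lines
```

Because BM25 only rewards exact term overlap, it is cheap and needs no training, but unlike dense retrieval it cannot match synonyms such as "tally" and "count".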

Learning Action. Learning actions continuously acquire and update knowledge by updating the agent's semantic and procedural memories.

External Action

Dialogue with Humans/Agents. Agents can interact with humans or other agents and obtain rich information from the interaction as feedback.

Digital Environment. Agents can interact with digital systems such as online judge (OJ) platforms, web pages, compilers, and other external tools, and use the information obtained during the interaction as feedback to optimize themselves.
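A minimal sketch of a digital environment acting as a feedback source: a candidate program is compiled with Python's built-in `compile()`, executed, and checked against test cases, and any error is turned into a feedback message for revision. The `revise` callable stands in for an LLM call and is hypothetical. Running generated code with `exec` like this is unsafe outside a sandbox.

```python
from typing import Callable, Optional


def run_with_feedback(source: str, func_name: str, tests: list[tuple[tuple, object]]) -> Optional[str]:
    """Return None if all tests pass, otherwise a textual feedback message."""
    try:
        code_obj = compile(source, "<candidate>", "exec")
    except SyntaxError as err:
        return f"SyntaxError on line {err.lineno}: {err.msg}"
    namespace: dict = {}
    try:
        exec(code_obj, namespace)  # unsafe for untrusted code; sandbox in practice
        func = namespace[func_name]
        for args, expected in tests:
            actual = func(*args)
            if actual != expected:
                return f"{func_name}{args} returned {actual!r}, expected {expected!r}"
    except Exception as err:
        return f"{type(err).__name__}: {err}"
    return None


def refine(source: str, func_name: str, tests: list[tuple[tuple, object]],
           revise: Callable[[str, str], str], max_rounds: int = 3) -> tuple[str, Optional[str]]:
    """Alternate between running the candidate and revising it with the feedback.
    Returns the final source and any remaining feedback (None means all tests pass)."""
    for _ in range(max_rounds):
        feedback = run_with_feedback(source, func_name, tests)
        if feedback is None:
            return source, None
        source = revise(source, feedback)
    return source, run_with_feedback(source, func_name, tests)


buggy = "def square(x):\n    return x * 2"
tests = [((3,), 9), ((0,), 0)]
print(run_with_feedback(buggy, "square", tests))  # square(3,) returned 6, expected 9

# Scripted stand-in for an LLM that fixes the bug after seeing the feedback.
fixed, remaining = refine(buggy, "square", tests, lambda src, fb: "def square(x):\n    return x * x")
print(remaining)  # None
```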