DPO#

Note

DPO proposes a much simpler alternative to RLHF for aligning language models to human preferences.

Via clever mathematical insight, the authors show that given an LLM, there is a specific reward function for which that LLM is optimal. DPO then trains the LLM directly to make the reward function (that’s now implicitly defined by the LLM) consistent with the human rankings.

Preliminaries#

We review the RLHF pipeline. It usually includes three phases:

supervised fine-tuning (SFT)

preference sampling and reward learning

RL optimization

RL Fine-Tuning Phase: During the RL phase, we use the learned reward function to provide feedback to the language model. In particular, we formulate the following optimization problem

where \(\beta\) is a parameter controlling the deviation from the base reference policy \(\pi_{\text{ref}}\), namely the initial SFT mode \(\pi^{\text{SFT}}\). In practice, the language model policy \(\pi_{\theta}\) is also initialized to \(\pi^{\text{SFT}}\).

Direct Preference Optimization#

Motivated by the challenges of applying reinforcement learning algorithms on large-scale problems such as fine-tuning language models, our goal is to derive a simple approach for policy optimization using preferences directly.

Deriving the DPO objective. We start with the same RL objective as prior work, under a general reward function \(r\). We can show that the optimal solution to the KL-constrained reward maximization objective takes the form:

where \(Z(x) = \sum_{y}\pi_{\text{ref}}(y|x)\exp\left(\frac{1}{\beta}r(x, y)\right)\). Even if we use the MLE estimate \(r_{\phi}\) of the ground-truth reward function \(r^{\ast}\), it is still expensive to estimate the partition function \(Z(x)\), which makes this representation hard to utilize in practice. However, we can rearrange it to express the reward function in terms of its corresponding optimal policy \(\pi_{r}\), the reference policy \(\pi_{\text{ref}}\), and the unknown partition function \(Z(\cdot)\):

We can apply this reparameterization to the ground-truth reward \(r^{\ast}\) and corresponding optimal model \(\pi^{\ast}\). Fortunately, the Bradley-Terry model depends only on the difference of rewards between two completions: \(p^{\ast}(y_1 \succ y_2|x) = \sigma(r^{\ast}(x, y_{1}) - r^{\ast}(x, y_{2}))\). Substituting the reparameterization for \(r^{\ast}(x, y)\) into this preference model, the partition function cancels, and we can express the human preference probability in terms of only the optimal policy \(\pi^{\ast}\) and reference policy \(\pi_{\text{ref}}\):

Now that we have the probability of human preference data in terms of the optimal policy rather than the reward model, we can formulate a maximum likelihood objective for a parametrized policy \(\pi_{\theta}\):

Loss#

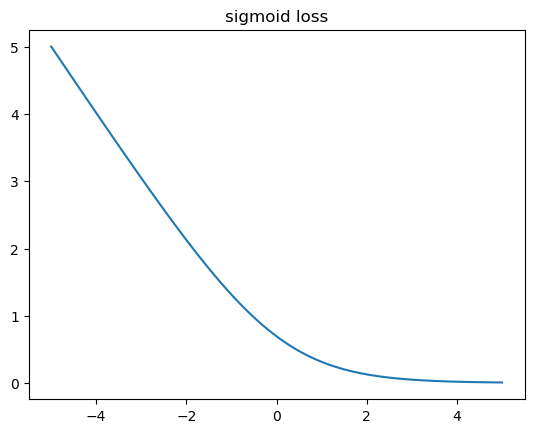

sigmoid:

%matplotlib inline

import numpy as np

import matplotlib.pyplot as plt

x = np.arange(-5, 5.1, 0.1)

y1 = -np.log(1 / (1 + np.exp(-x)))

plt.plot(x, y1)

plt.title('sigmoid loss')

plt.show()

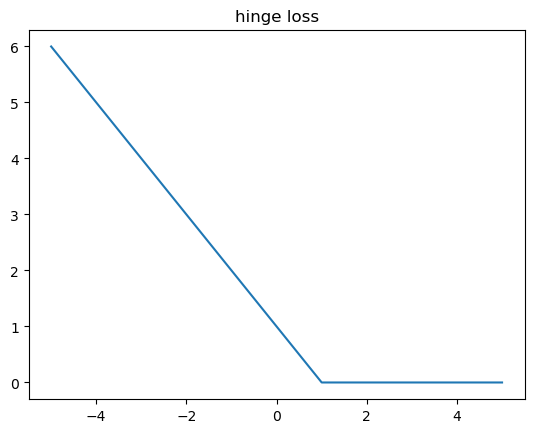

hinge:

y2 = np.maximum(0, 1 - x)

plt.plot(x, y2)

plt.title('hinge loss')

plt.show()

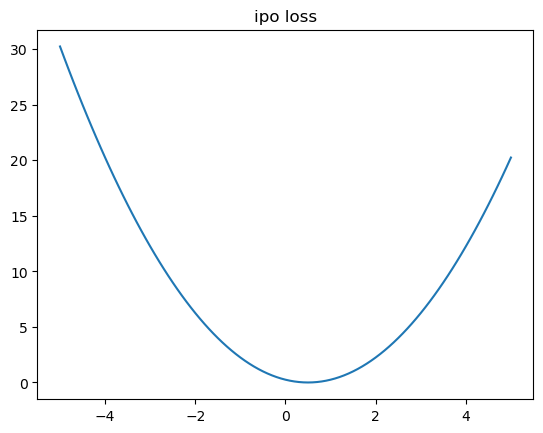

ipo:

y3 = (x - 0.5) ** 2

plt.plot(x, y3)

plt.title('ipo loss')

plt.show()

Appendix#

Derivation of \(\pi_{r}\)#

The RL objective:

where we have partition function:

Note that the partition function is a function of only \(x\) and the reference policy \(\pi_{\text{ref}}\), but does not depend on the policy \(\pi\). We can now define

which is a valid probability distribution. Since \(Z(x)\) is not a function of \(y\), we can then re-organize the final objective as:

Now, since \(Z(x)\) does not depend on \(\pi\), the minimum is achieved by the policy that minimizes the first KL term. Gibbs’ inequality tells us that the KL-divergence is minimized at 0 if and only if the two distributions are identical. Hence we have the optimal solution:

for all \(x\in\mathcal{D}\).

Gradient of DPO Loss#

where \(\hat{r}_{\theta}(x, y ) = \beta\log\frac{\pi_{\theta}(y|x)}{\pi_{\text{ref}}(y|x)}\) is the reward implicitly defined by the language model \(\pi_{\theta}\) and reference model \(\pi_{\text{ref}}\). Intuitively, the gradient of the loss function \(\mathcal{L}_{\text{DPO}}\) increases the likelihood of the preferred completions \(y_{w}\) and decreases the likelihood of dispreferred completions \(y_{l}\). Importantly, the examples are weighed by how incorrectly the implicit reward model orders the completions. Our experiments suggest the importance of this weighting, as a naive version of this method without the weighting coefficient can cause the language model to degenerate.