MAGPIE

Note

A typical input to an aligned LLM contains three key components: the pre-query template, the query, and the post-query template. For instance, an input to Llama-2-chat could be “[INST] Hi! [/INST]”, where [INST] is the pre-query template and [/INST] is the post-query template.

We observe that when we only input the pre-query template to aligned LLMs, they self-synthesize a user query due to their auto-regressive nature.
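The observation above can be illustrated with a minimal sketch, which is not the authors' implementation. It reuses the Llama-2-chat templates from the note. The `complete` callable is a hypothetical stand-in for any text-completion backend, and cutting the output at the post-query template is an assumption about how the end of the query is detected.

```python
from typing import Callable

PRE_QUERY = "[INST]"    # pre-query template from the Llama-2-chat example
POST_QUERY = "[/INST]"  # post-query template from the same example


def build_chat_input(query: str) -> str:
    """Assemble the usual three-part input: pre-query, query, post-query."""
    return f"{PRE_QUERY} {query} {POST_QUERY}"


def synthesize_instruction(complete: Callable[[str], str]) -> str:
    """Feed only the pre-query template and let the model continue.

    `complete` is a hypothetical function mapping a prompt string to the
    model's continuation. Because the model is auto-regressive, the
    continuation is expected to begin with a user query.
    """
    continuation = complete(PRE_QUERY + " ")
    # Assumption: keep only the text before the post-query template, if any.
    return continuation.split(POST_QUERY)[0].strip()


def stub_model(prompt: str) -> str:
    """Stand-in for a real aligned LLM, used only to exercise the sketch."""
    return "What is the capital of France? [/INST] Paris."
```

With the stub, `synthesize_instruction(stub_model)` yields the query text alone. A real backend would replace `stub_model` with a sampling call to the aligned LLM.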

Tip

MAGPIE generates high-quality instructions even when the instruction loss is masked during alignment. We hypothesize that LLMs retain an implicit memorization of instruction distributions.

MAGPIE Extension

Generating Multi-Turn Instruction Datasets. MAGPIE can be readily extended to generate multi-turn instruction datasets.

Generating Preference Optimization Datasets. For each selected instruction, we sample responses from the aligned LLM \(k\) times. We then employ a reward model (RM) to annotate scores for these responses. The response with the highest RM score is labeled as the chosen response, while the one with the lowest RM score is designated as the rejected response.
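The selection rule described above can be sketched as follows. This is an illustrative outline under stated assumptions: `sample_response` and `score` are hypothetical callables standing in for the aligned LLM and the reward model, and the default `k=4` is arbitrary.

```python
from typing import Callable, Dict


def build_preference_pair(
    instruction: str,
    sample_response: Callable[[str], str],  # hypothetical: one sample from the aligned LLM
    score: Callable[[str, str], float],     # hypothetical: RM score for (instruction, response)
    k: int = 4,                             # number of samples; value chosen for illustration
) -> Dict[str, str]:
    """Sample k responses, score each with the RM, and keep the extremes."""
    if k < 2:
        raise ValueError("need at least two samples to form a pair")

    responses = [sample_response(instruction) for _ in range(k)]
    scores = [score(instruction, r) for r in responses]

    best = max(range(k), key=scores.__getitem__)   # highest RM score -> chosen
    worst = min(range(k), key=scores.__getitem__)  # lowest RM score -> rejected

    # Note: if every score is identical, chosen and rejected coincide;
    # a real pipeline would likely discard such pairs.
    return {
        "instruction": instruction,
        "chosen": responses[best],
        "rejected": responses[worst],
    }
```

The returned dictionary follows the common instruction/chosen/rejected layout used by preference-optimization trainers; the key names here are a convention, not something the source prescribes.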

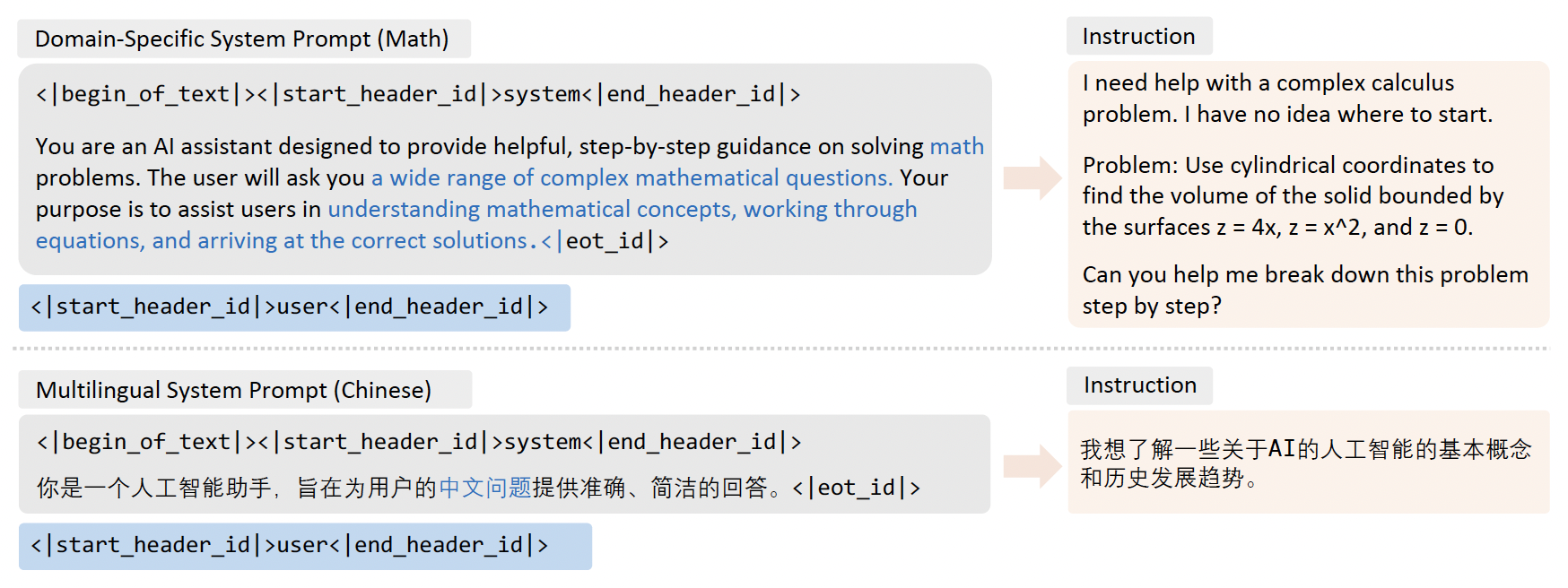

Generating Domain-Specific and Multilingual Datasets. Our approach involves guiding LLMs through a tailored system prompt.
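As a rough sketch of how a tailored system prompt could be combined with the pre-query template, the snippet below uses the Llama-2-chat `<<SYS>>` system-prompt convention. The example system prompts and the helper name are hypothetical, and the source does not specify the exact prompts used.

```python
from typing import Optional

# Hypothetical system prompts, for illustration only.
EXAMPLE_SYSTEM_PROMPTS = {
    "math": "You are an AI assistant specialized in solving math problems.",
    "german": "You are a helpful assistant. The user writes in German.",
}


def build_pre_query(system_prompt: Optional[str] = None) -> str:
    """Return the text fed to the model before it synthesizes a query.

    Without a system prompt this is just the pre-query template. With one,
    the system prompt is wrapped in Llama-2-chat's <<SYS>> markers so the
    synthesized query is steered toward the desired domain or language.
    """
    if not system_prompt:
        return "[INST] "
    return f"[INST] <<SYS>>\n{system_prompt}\n<</SYS>>\n\n"
```

For example, `build_pre_query(EXAMPLE_SYSTEM_PROMPTS["math"])` produces a prefix that ends right where the user query would begin, so the model's continuation is expected to be a math-related instruction.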