Monte Carlo vs Temporal Difference Learning#

Note

Monte Carlo and Temporal Difference Learning are two different strategies on how to train our value function. On one hand, Monte Carlo uses an entire episode of experience before learning. On the other hand, Temporal Difference uses only a step to learn.

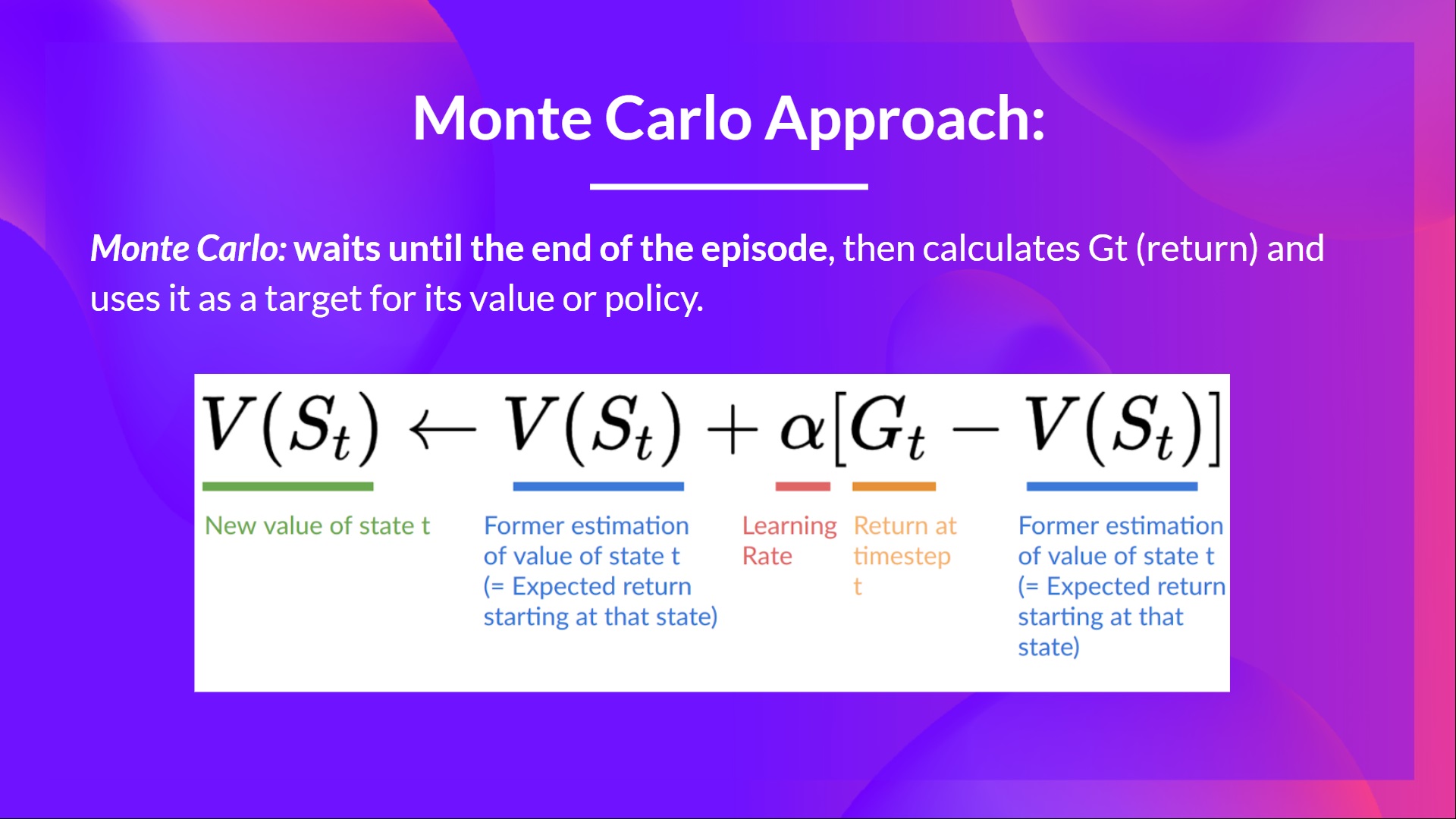

Monte Carlo: learning at the end of the episode#

Monte Carlo waits until the end of the episode, calculates \(G_{t}\) and uses it as a target for updating \(V(S_{t})\). So it requires a complete episode of interaction before updating our value function.

By running more and more episodes, the agent will learn to play better and better.

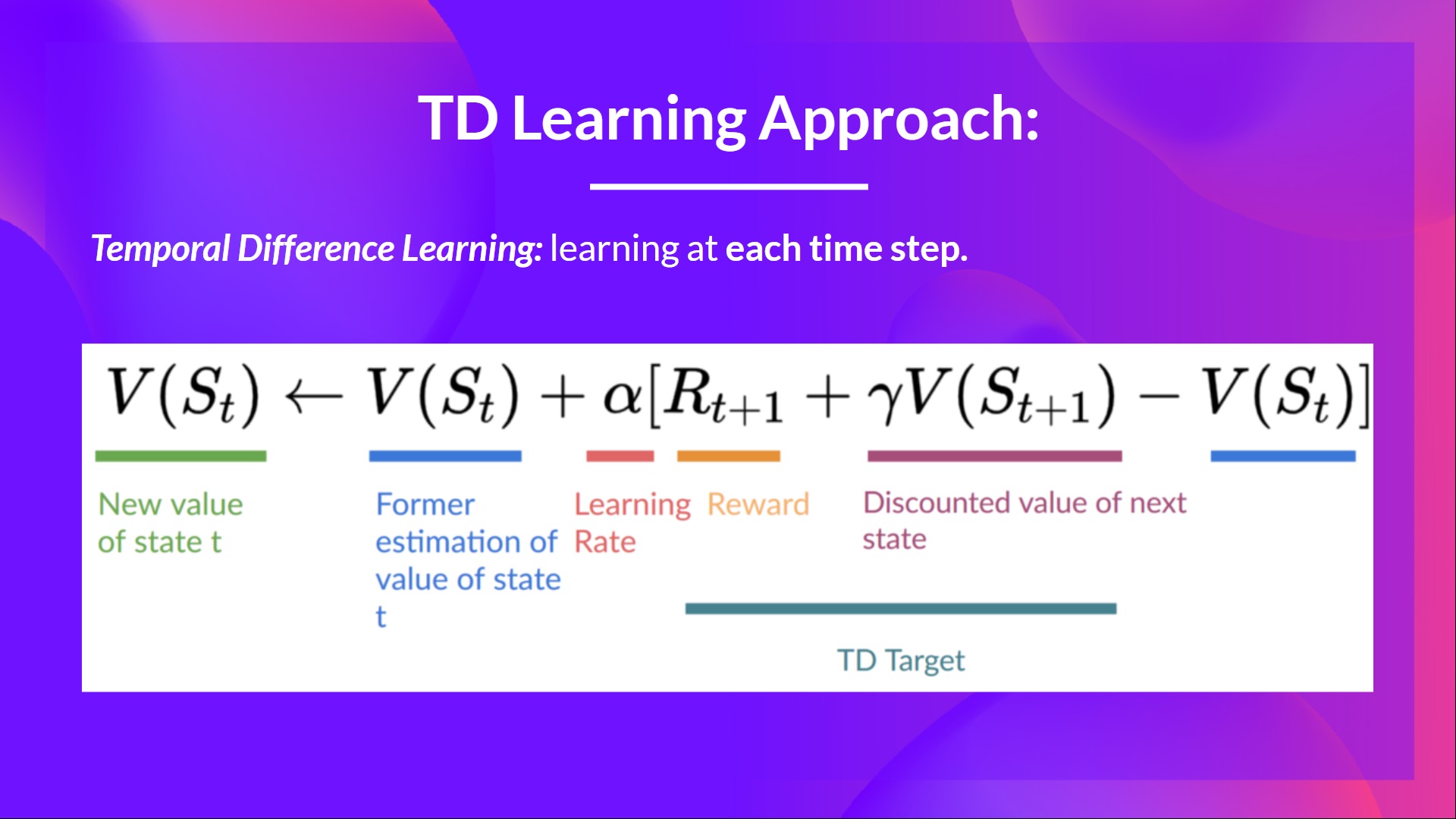

Temporal Difference Learning: learning at each step#

Temporal Difference, on the other hand, waits for only one step \(S_{t+1}\) to form a TD target and update \(V(S_{t})\) using the TD target.

The idea with TD is to update \(V(S_{t})\) at each step. But because we didn’t experience an entire episode, we don’t have \(G_{t}\). Instead, we estimate \(G_{t}\) by adding \(R_{t+1}\) and the discounted value of the next state.

This is called bootstrapping. It’s called this because TD bases its update in part on an existing estimate \(V(S_{t+1})\) and not a complete sample \(G_{t}\).

Action-value based methods#

Action-value based Monte Carlo:

Action-value based Temporal Difference learning (Sarsa):